The Right Linux Server Backup Strategy

If you run a website or an online business, you need to have a backup strategy to restore data in case of an emergency. Running a critical website without a backup could mean a full data loss, resulting in shutting down your company.

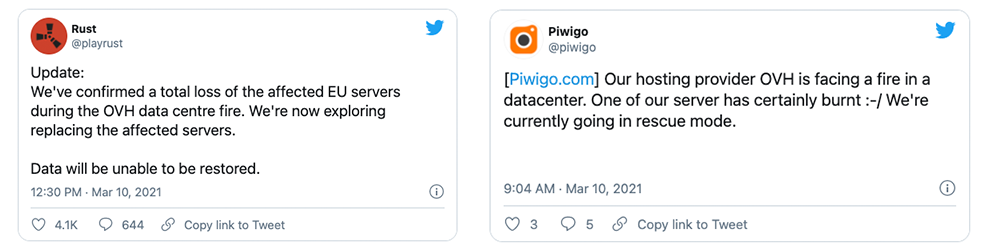

This month, an OVH data center in Europe burned down and took millions of sites and thousands of businesses offline.

We at MGT-COMMERCE have offered managed hosting for Magento on Amazon Web Services for more than ten years. With the experience of managing data-critical businesses, we have developed CloudPanel and integrated Automatic Backups as Cloud Features for Amazon Web Services, DigitalOcean, and Google Cloud.

Hope for the best and prepare for the worst

When we develop a new product, application, or service, we hope for the best, but we also think about the worst-case scenario. To prevent the worst-case scenario, you need a backup, restore, and verification strategy. One of my first mentors always said to me: "If you don't test your backups, you don't have backups."

Having full backups means that you are protected from several risks, including hardware failures, human errors, cyber attacks, data corruption, or natural disasters. For businesses seeking professional data recovery services, partnering with experts can ensure swift restoration of critical data and minimize operational disruptions.

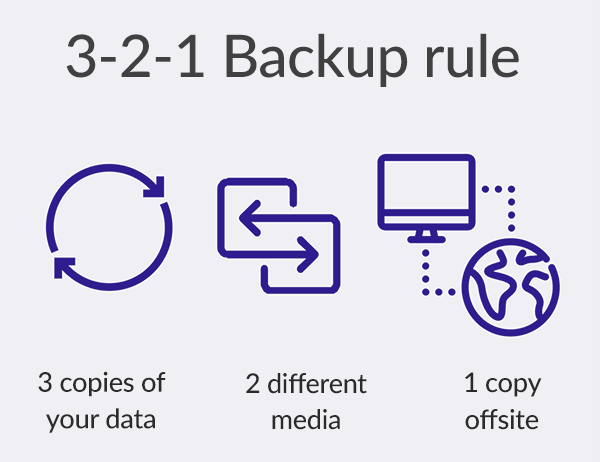

The 3-2-1 Backup Rule

The 3-2-1 Backup Rule is an easy-to-remember shorthand for a common approach to keeping your data safe in almost any failure scenario. The rule is: keep at least three (3) copies of your data and store two (2) backup copies on different storage media, with one (1) of them located offsite.

In essence:

- Have at least three copies of your data.

- Store the copies on two different media.

- Keep at least one backup copy offsite.

Three Copies of Data

In addition to your primary data, keeping at least two additional backups is recommended. The more backup copies you have, the lower the chance of losing all of them at once. Thus, the 3-2-1 backup rule states that you need at least three copies of your data, meaning the primary data and two backups of this data.

Two Different Media Types

It should go without saying that storing several backups of your data on the same server/instance is hardly logical. Whether because of Murphy’s Law, wear and tear, or drives that were bought together and share the same mean time between failures (MTBF), it is quite common for a second drive in the same storage system to fail shortly after the first one.

Keeping two copies of data on different storage media types, such as internal hard disk drives plus removable storage media (tape, external hard drive, USB drive, etc.) is strongly recommended.

Keep at least one backup copy offsite

Because a local disaster can damage all copies of data stored in one place, the 3-2-1 backup rule says: keep at least one copy of your data in a remote location, such as offsite storage or the cloud.

While storing one backup copy offsite strengthens your data security, having another backup copy onsite provides for faster and simpler recovery in case of failure.
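The three conditions of the rule can be expressed as a small check. The following is a minimal sketch; the `Copy` class, its fields, and the `check_321` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of the data (hypothetical model for illustration)."""
    name: str
    medium: str          # e.g. "ebs", "object-storage", "tape"
    offsite: bool
    primary: bool = False


def check_321(copies):
    """Return the violated 3-2-1 conditions; an empty list means the rule is met."""
    problems = []
    # 3: the primary data plus at least two backups
    if len(copies) < 3:
        problems.append("fewer than three copies")
    # 2: the copies are spread over at least two different media
    if len({c.medium for c in copies}) < 2:
        problems.append("fewer than two media types")
    # 1: at least one backup (not the primary data) is stored offsite
    if not any(c.offsite for c in copies if not c.primary):
        problems.append("no offsite backup")
    return problems
```

For example, a production disk, a local snapshot on the same kind of storage, and one copy in remote object storage would pass all three checks.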

Our Backup Strategy

We at MGT-COMMERCE manage small and enterprise auto-scaling customers. The average customer uses about 150 GB of disk space, and the MySQL database is between 5 and 10 GB in size.

In the company's early days, we used open-source backup software like rsnapshot for incremental data backups and a bash script for backing up the MySQL database via mysqldump every 4 hours.

This approach worked very well for small data volumes and small databases, but not for projects with frequent data changes and large databases: calculating the incremental changes takes time and slows down disk performance, and running mysqldump every couple of hours can lock tables, block queries, and cause downtime.
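To make the locking issue concrete: by default, mysqldump locks the tables it is dumping. A commonly used mitigation for InnoDB tables is the `--single-transaction` option, which dumps from a consistent snapshot instead. The sketch below is not the script we used; the function names are hypothetical, and it assumes that credentials come from a MySQL client option file.

```python
import gzip
import shutil
import subprocess


def mysqldump_command(database):
    """Build a mysqldump call that avoids table locks on InnoDB tables."""
    return [
        "mysqldump",
        "--single-transaction",  # dump from a consistent snapshot (InnoDB only)
        "--quick",               # stream rows instead of buffering whole tables
        database,
    ]


def dump_database(database, target_path):
    """Stream the dump into a gzip file; raise if mysqldump exits with an error."""
    cmd = mysqldump_command(database)
    with subprocess.Popen(cmd, stdout=subprocess.PIPE) as proc, \
            gzip.open(target_path, "wb") as out:
        shutil.copyfileobj(proc.stdout, out)
    # Leaving the with-block waits for mysqldump, so returncode is set here.
    if proc.returncode != 0:
        raise subprocess.CalledProcessError(proc.returncode, cmd)
```

Even without locks, a dump of a large database still produces considerable read load, which is one reason image-based backups scale better.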

For many years now, we have been taking hourly backups for all of our customers by using Amazon Machine Images (AMI).

What is an Amazon Machine Image?

An Amazon Machine Image (AMI) is basically a full backup of the entire instance, including all associated disks.

Advantages:

- Easy to create and manage via AWS SDK

- Restore within minutes

- No deterioration in performance

- Stored in different data centers

- You pay for the data changes, not for the created AMIs

- Easy to copy to other AWS Regions

- Can take advantage of Amazon EBS encryption

- Market leader, used by millions of companies

Disadvantages:

- Cost estimation is difficult

- An instance (source) needs to be launched for restoring files, directories or databases
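As a minimal sketch of creating an AMI through the AWS SDK, the function below calls the `create_image` operation of a boto3 EC2 client. The function name, the naming scheme, and the choice of `NoReboot=True` are assumptions for illustration, not a description of our production setup.

```python
from datetime import datetime, timezone


def create_hourly_ami(ec2_client, instance_id, now=None):
    """Create an AMI of a running instance and return the new image ID.

    ec2_client is expected to behave like boto3.client("ec2").
    """
    now = now or datetime.now(timezone.utc)
    # Hypothetical naming scheme: backup-<instance>-<UTC timestamp>
    name = f"backup-{instance_id}-{now:%Y-%m-%d-%H%M}"
    response = ec2_client.create_image(
        InstanceId=instance_id,
        Name=name,
        NoReboot=True,  # do not stop the instance while the image is taken
    )
    return response["ImageId"]
```

With boto3 installed and credentials configured, the client would be created with `boto3.client("ec2")`. Note that with `NoReboot=True` the instance keeps running, so AWS cannot guarantee the file system integrity of the resulting image; this is a trade-off between availability and consistency.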

Today we perform more than 25,000 backups per day with a retention period of 7 days.

The retention period can be configured individually on the instance level of each customer.
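A retention period boils down to selecting every image older than a cutoff date. The following sketch assumes image records in the shape returned by the EC2 `describe_images` operation, with an `ImageId` and a `CreationDate` such as `2021-03-15T14:00:00.000Z`; the function name and the default of 7 days are illustrative.

```python
from datetime import datetime, timedelta, timezone


def expired_image_ids(images, retention_days=7, now=None):
    """Return the IDs of all images created before the retention cutoff."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    expired = []
    for image in images:
        # CreationDate is a UTC timestamp string ending in "Z"
        created = datetime.strptime(
            image["CreationDate"], "%Y-%m-%dT%H:%M:%S.%fZ"
        ).replace(tzinfo=timezone.utc)
        if created < cutoff:
            expired.append(image["ImageId"])
    return expired
```

The returned IDs could then be passed to `deregister_image`. Deregistering an AMI does not delete its underlying EBS snapshots, so those need to be removed separately to stop paying for them.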

Test your backups

You can have hourly backups or offsite backups, but they are worthless if they are not working when you need them.

CloudPanel creates a dump of each database every night at 4:15 AM and stores it in the directory /home/cloudpanel/backups/$databaseName.

This dump is useful for updating dev, test, or staging environments where the database doesn't need to be up to date.
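A cheap first check is whether the most recent dump exists, is not empty, and is not older than expected. The sketch below assumes that each database directory contains one file per dump; the function name and the 26-hour threshold are hypothetical. It does not replace an actual restore test.

```python
import time
from pathlib import Path


def check_latest_dump(directory, max_age_hours=26, now=None):
    """Return a problem description for the newest dump, or None if it looks fine."""
    files = [p for p in Path(directory).iterdir() if p.is_file()]
    if not files:
        return "no dump files found"
    # The newest file by modification time is treated as the latest dump
    latest = max(files, key=lambda p: p.stat().st_mtime)
    stat = latest.stat()
    if stat.st_size == 0:
        return f"{latest.name} is empty"
    current = now if now is not None else time.time()
    age_hours = (current - stat.st_mtime) / 3600
    if age_hours > max_age_hours:
        return f"{latest.name} is {age_hours:.0f} hours old"
    return None
```

Run from a cron job after the nightly dump, a non-`None` result could trigger an alert. Importing the dump into a test environment remains the only real proof that it can be restored.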

For many customers, we have bash scripts running as cron jobs that update the test/stage environment every night. We rsync the changed files to test/stage and import the previous night's database dump.

A very good approach is to have a cheap test/stage server separate from your production environment, e.g., by using another cloud provider.

On a daily basis, you rsync all files and import the latest nightly database dump.
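The nightly refresh described above can be sketched in a few lines. This is an illustration, not our production script: the function names are hypothetical, the dump is assumed to be an uncompressed SQL file, and MySQL credentials are assumed to come from a client option file.

```python
import subprocess


def rsync_command(source, destination):
    """Build an rsync call that mirrors source to destination."""
    return [
        "rsync",
        "-az",        # archive mode, compress data during transfer
        "--delete",   # remove files on the target that no longer exist in the source
        source,
        destination,
    ]


def refresh_staging(source, destination, database, dump_path):
    """Sync the files, then import the latest dump into the staging database."""
    subprocess.run(rsync_command(source, destination), check=True)
    with open(dump_path, "rb") as dump:
        # Equivalent to: mysql <database> < dump.sql
        subprocess.run(["mysql", database], stdin=dump, check=True)
```

A trailing slash on the rsync source (for example `htdocs/`) copies the directory contents rather than the directory itself. Because `check=True` raises on any non-zero exit code, a failed sync or import surfaces immediately, which doubles as a daily restore test.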

In case of an emergency, you have multiple backups in different data centers and one offsite backup available.

Conclusion

As you have learned, having a good backup and recovery strategy is a must if you don't want to risk losing your data or your business. If you are a developer, an agency, or a managed service provider, it should be one of your highest priorities, because even experts with more than 20 years of experience are human and make mistakes.

If you are using a managed hosting service that takes care of your application, you should ask how they perform backups and how often.

Quite often, backups are not included, and you need to take care of them yourself.

Nevertheless, it's highly recommended to keep your own offsite backup, because you only find out how good a provider is once a disaster has already happened, and you always want to be prepared for the worst case.